Take Control of Your Data: Run Your Own Private ChatGPT

AI is everywhere. Companies are racing to add it to everything they can, whether you want it there or not. This raises a lot of questions about how secure our private data really is. Are they using your information to train their AI? Are they selling it? How secure is it? We have already seen cases where people's AI conversations appeared in Google searches. You might be inclined to avoid or disable AI features, but then you may start to fall behind the people who do use them.

How can you utilize AI and keep your data private?

There is a way: you can set up your own local ChatGPT-like service where your data never leaves your private network.

What can it do for you?

Much of what you can do with the “less” private AI services. Here are a few ideas:

- You can configure models to read in documents. This allows you to ask the AI targeted questions about the documents without needing to train a custom model.

  - Feed in internal documentation and ask the AI for answers rather than searching through the documents yourself.

  - Pull in your personal notes. Ask questions like “When did I last speak to client X about subject Y?”

- You can create a model based on an existing model with a pre-supplied prompt.

  - Create a model designed to help you compose marketing materials.

  - Create a model to help you create a requirements document.

- There are browser extensions that work with Ollama, such as Orian, that allow you to chat or interact with a website’s content.

  - Summarize the web page.

  - Draft an email response in Gmail.

- You can build custom AI-powered automations with services like n8n.

  - Read new emails, summarize them, and put action items into your preferred to-do list app.

  - Set up an email auto-responder for commonly asked questions.
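To make the document idea above a little more concrete, here is a minimal sketch of the simplest way a question about a document can be posed to a chat model: the document text is placed in the prompt next to the question. The function name, the instruction wording, and the 8000-character cutoff are all illustrative assumptions, and real tools typically retrieve only the relevant passages first rather than sending the whole document.

```python
def build_document_messages(document_text: str, question: str,
                            max_chars: int = 8000) -> list[dict]:
    """Build chat messages that ask one question about one document.

    Hypothetical helper for illustration; max_chars is an arbitrary cutoff
    so that very long documents do not overflow the model's context window.
    """
    excerpt = document_text[:max_chars]
    system = ("Answer using only the document provided. "
              "If the answer is not in the document, say so.")
    user = f"Document:\n{excerpt}\n\nQuestion: {question}"
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]


# Example: the resulting list can be sent as the "messages" field of a chat request.
messages = build_document_messages(
    "Met client X on 3 May about pricing.",
    "When did I last speak to client X?",
)
```

Because nothing is trained, swapping in a different document only means building a new prompt.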

Getting Started

While getting the base up and running isn’t overly complex, it does require some technical knowledge. Please continue reading, and if you have any questions, I’m here to help!

Open WebUI

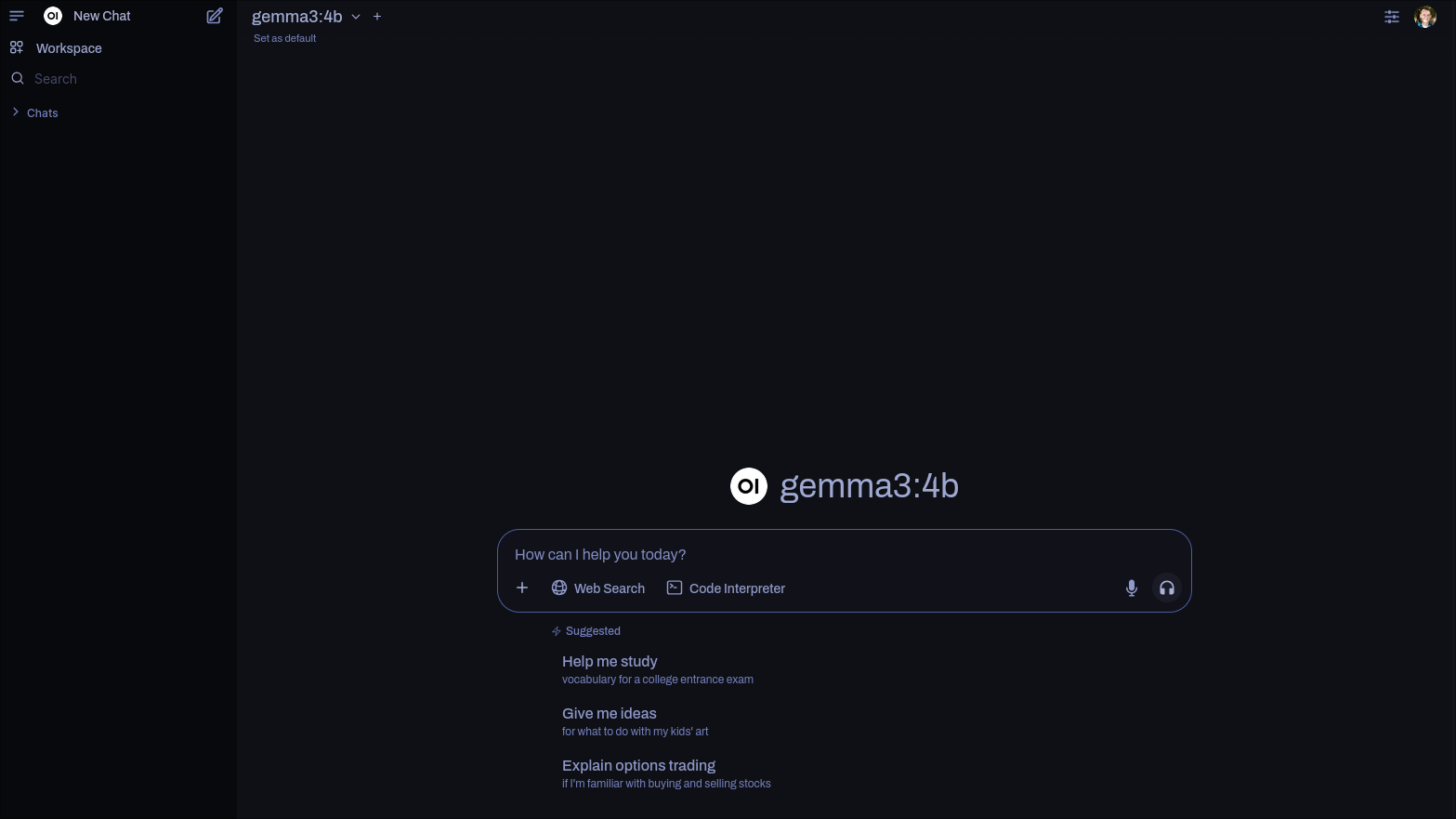

There is a relatively easy way to set up a service very much like ChatGPT. Enter Open WebUI. It provides a user interface very similar to ChatGPT, with the ability to use local AI models.

Ollama

Ollama is a service that you can run on your local network that allows Open WebUI to connect to AI models you have installed locally. Check out ollama.com/search to see a list of all the available models.
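Ollama also exposes an HTTP API, so you can check which models are already installed without opening a browser. The sketch below assumes Ollama is reachable at http://localhost:11434 (its default port) and uses the /api/tags endpoint; the function names are my own.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default port on this machine.
OLLAMA_URL = "http://localhost:11434"


def model_names(tags_response: dict) -> list[str]:
    """Extract the model names from the JSON body returned by GET /api/tags."""
    return [model["name"] for model in tags_response.get("models", [])]


def list_local_models(base_url: str = OLLAMA_URL) -> list[str]:
    """Ask a running Ollama server which models are installed locally."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=10) as resp:
        return model_names(json.load(resp))


# Usage (requires a running Ollama server):
#   print(list_local_models())
```

On a fresh install the list will be empty until you pull your first model.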

Setup

There are a number of ways to get this set up in your local environment, but the easiest is probably with Docker, as outlined in Open WebUI’s documentation. If you have docker-compose, here is a simple docker-compose.yml to get you started:

    services:
      webui:
        image: ghcr.io/open-webui/open-webui:main
        ports:
          - 8080:8080/tcp
        environment:
          - OLLAMA_BASE_URL=http://ollama:11434
        volumes:
          - openwebui:/app/backend/data
        depends_on:
          - ollama

      ollama:
        image: ollama/ollama:latest
        environment:
          - OLLAMA_ORIGINS="*"
        ports:
          - 11434:11434/tcp
        volumes:
          - ollama:/root/.ollama
        deploy:
          resources:
            reservations:
              devices:
                - driver: nvidia
                  count: all
                  capabilities: [gpu]

    volumes:
      openwebui:
      ollama:

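NOTE: This assumes you are using an Nvidia GPU with the NVIDIA Container Toolkit installed. If not, you will need to modify it accordingly; for example, removing the deploy section lets Ollama run on the CPU, only more slowly.

To start both services, run docker compose up -d from the folder containing the file, then open http://localhost:8080 in your browser.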
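Once the containers are started, a quick way to confirm that both services are listening is to try a TCP connection to each published port. This is a minimal sketch, assuming you run it on the Docker host itself so that "localhost" reaches the ports from the compose file (8080 for Open WebUI, 11434 for Ollama); the helper names are my own.

```python
import socket

# Ports published by the compose file; the host name is an assumption.
SERVICES = {"Open WebUI": 8080, "Ollama": 11434}


def is_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def check_services(host: str = "localhost") -> dict[str, bool]:
    """Map each service name to whether its port accepts connections."""
    return {name: is_port_open(host, port) for name, port in SERVICES.items()}


# Usage:
#   for name, ok in check_services().items():
#       print(f"{name}: {'up' if ok else 'not reachable'}")
```

An open port only shows that something is listening, so treat this as a first check rather than proof that the service is healthy.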

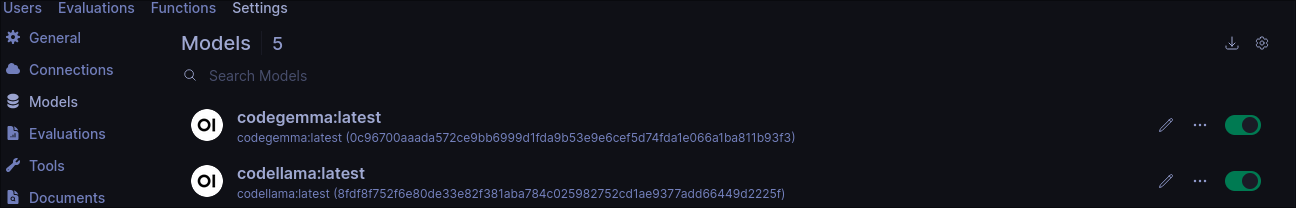

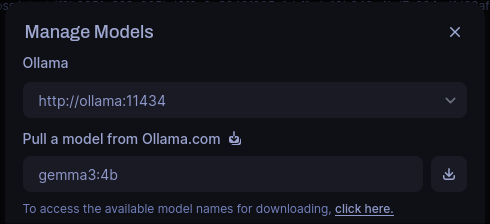

Once you have it up and running, you will need to install at least one model from the list I linked earlier. You can install the model right in Open WebUI in the admin settings.

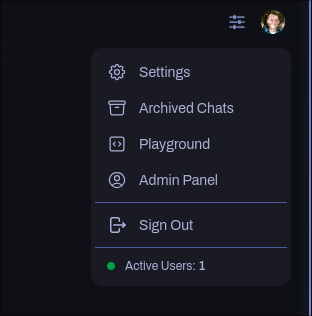

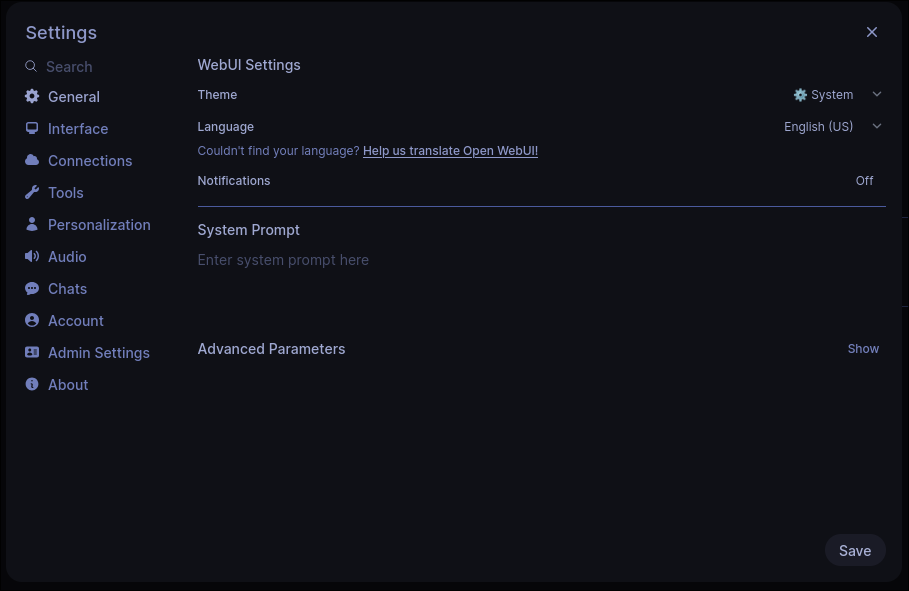

- Go to the User menu on the top left and click Settings->Admin Settings->Models.

- Click on the download icon in the top right.

- Enter the model tag you want in the “Pull a Model from Ollama.com” input box.

- Click the download button next to the model tag.
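If you would rather script this step, Ollama's HTTP API has a pull endpoint that does the same download. The sketch below assumes the Ollama port from the compose file is reachable at http://localhost:11434 and uses "llama3.2" purely as an example tag; older Ollama releases may expect the field to be called "name" instead of "model", so check the API documentation for your version.

```python
import json
import urllib.request

# Assumption: the Ollama port from the compose file is published on localhost.
OLLAMA_URL = "http://localhost:11434"


def build_pull_request(model_tag: str,
                       base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a POST /api/pull request asking Ollama to download model_tag."""
    # "stream": False asks for one final JSON object instead of progress updates.
    body = json.dumps({"model": model_tag, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/api/pull",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def pull_model(model_tag: str, base_url: str = OLLAMA_URL) -> str:
    """Download a model and return the final status string Ollama reports."""
    request = build_pull_request(model_tag, base_url)
    # Large models can take a long time to download, so no timeout is set.
    with urllib.request.urlopen(request) as resp:
        return json.load(resp).get("status", "")


# Usage (requires a running Ollama server; "llama3.2" is an example tag):
#   print(pull_model("llama3.2"))
```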

Now when you click on “New Chat”, the newly added model should already be selected, and you will see a page that looks very similar to ChatGPT.
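The chat window is not the only way in: scripts and automations can send messages to the same Ollama server. Here is a minimal sketch of a chat request with a pre-supplied system prompt, assuming Ollama is reachable at http://localhost:11434 and that a model with the tag you pass in is already installed; the helper names and the example prompts are illustrative.

```python
import json
import urllib.request

# Assumption: the Ollama port from the compose file is published on localhost.
OLLAMA_URL = "http://localhost:11434"


def build_chat_payload(model: str, system_prompt: str, user_message: str) -> dict:
    """Build the JSON body for POST /api/chat with a preset system prompt."""
    return {
        "model": model,
        "stream": False,  # return one complete reply instead of a token stream
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_message},
        ],
    }


def extract_reply(chat_response: dict) -> str:
    """Pull the assistant's text out of a non-streaming /api/chat response."""
    return chat_response["message"]["content"]


def chat(model: str, system_prompt: str, user_message: str,
         base_url: str = OLLAMA_URL) -> str:
    """Send one message to a local model and return its reply."""
    body = json.dumps(build_chat_payload(model, system_prompt, user_message))
    request = urllib.request.Request(
        f"{base_url}/api/chat",
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=300) as resp:
        return extract_reply(json.load(resp))


# Usage (requires a running Ollama server and an installed model):
#   print(chat("llama3.2", "You write concise marketing copy.",
#              "Draft a tagline for a neighborhood bakery."))
```

The request goes only to your own server, so the conversation stays on your network.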

Feeling Overwhelmed?

If this is all a bit overwhelming, I am here to help. Schedule a Free 30-Minute Consultation to see how I can improve your process.